
SIMILAR WORK

Pioneering work on RL hedging includes Halperin (2017) and Kolm and Ritter (2019). Halperin (2017) approximates a parametrized optimal 𝑄-function with fitted 𝑄-learning, and Kolm and Ritter (2019) use a function-approximation SARSA method, fitting a non-linear function of the form 𝑦 = 𝑄(𝑋) to approximate the 𝑄-function, where 𝑋 is the current state-action pair (𝑠, 𝑎). Among studies that use DRL to solve the option hedging problem, Du et al. (2020) and Giurca and Borovkova (2021) use DQN to approximate the optimal 𝑄-function. Studies employing the policy-based DDPG method include Cao et al. (2021), Assa, Kenyon, and Zhang (2021), Xu and Dai (2022), and Fathi and Hientzsch (2023). Cao et al. (2023) extend DDPG in their DRL hedging study: instead of estimating the 𝑄-value, the critic network uses quantile regression (QR) to estimate the reward distribution at a discrete set of fixed quantile points.
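To make the quantile-regression idea concrete, the sketch below shows how a distribution can be represented by its values at a fixed set of quantile fractions, each found by minimizing the pinball (quantile) loss ρ_τ(u) = u(τ − 1{u < 0}). This is a generic illustration, not Cao et al.'s implementation: a brute-force grid search stands in for the critic network, the midpoint fractions τ_i = (2i − 1)/(2N) are a common distributional-RL convention assumed here, and the normally distributed "rewards" are synthetic.

```python
import numpy as np

def pinball_loss(theta, samples, tau):
    """Mean quantile-regression (pinball) loss of candidate location(s) theta at fraction tau.

    rho_tau(u) = u * (tau - 1{u < 0}), with residual u = sample - theta.
    """
    theta = np.atleast_1d(np.asarray(theta, dtype=float))
    u = samples[None, :] - theta[:, None]  # residuals, shape (len(theta), n_samples)
    return np.mean(u * (tau - (u < 0).astype(float)), axis=1)

def fit_quantiles(samples, n_quantiles, grid):
    """Estimate the distribution at N fixed quantile midpoints.

    The fractions tau_i are fixed; only their locations are learned. Here the
    'learning' is an exhaustive search over a grid rather than a neural network.
    """
    taus = (2 * np.arange(1, n_quantiles + 1) - 1) / (2 * n_quantiles)
    locs = np.array([grid[np.argmin(pinball_loss(grid, samples, t))] for t in taus])
    return taus, locs

rng = np.random.default_rng(0)
rewards = rng.normal(loc=0.0, scale=1.0, size=2_000)  # synthetic stand-in for sampled rewards
grid = np.linspace(-3.0, 3.0, 601)                     # candidate locations, step 0.01
taus, locs = fit_quantiles(rewards, n_quantiles=5, grid=grid)
print(np.round(taus, 2))  # [0.1 0.3 0.5 0.7 0.9]
print(np.round(locs, 2))
```

Because the pinball loss is minimized by the τ-quantile of the samples, each learned location approximates the empirical quantile at its fixed fraction; a QR critic learns such locations for the return rather than a single expected 𝑄-value.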

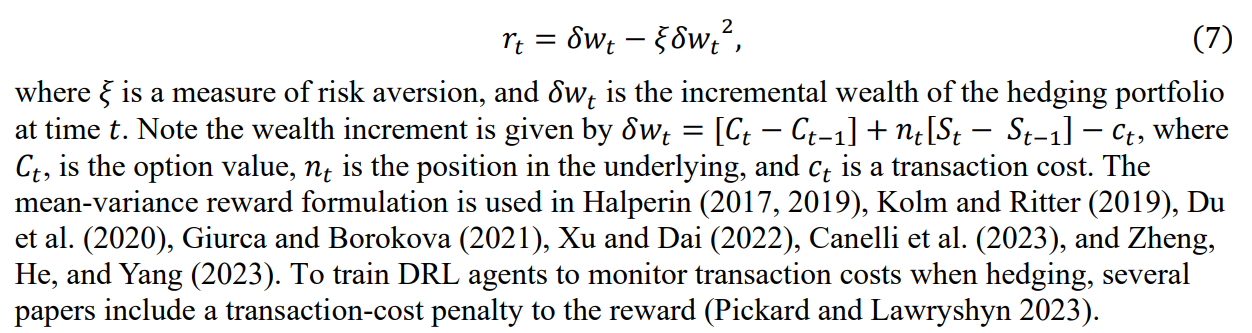

In the DRL hedging literature, most studies include the current asset price, time-to-maturity, and current holding in the state-space (Pickard and Lawryshyn 2023). Vittori et al. (2020), Giurca and Borovkova (2021), Xu and Dai (2022), Fathi and Hientzsch (2023), and Zheng, He, and Yang (2023) also include the BS Delta in the state-space, while several papers explicitly argue that the BS Delta can be deduced from the option and stock prices, so that including it only augments the state unnecessarily (Cao et al. 2021; Kolm and Ritter 2019; Du et al. 2020). As for the reward function, the mean-variance formulation is commonly used to provide feedback to the agent at each time step. A generalized version of the mean-variance reward for a single time-step 𝑡 is given as
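As a hedged illustration of these ingredients, the sketch below builds a state of (price, time-to-maturity, holding) with an optional BS Delta, and scores one step with the mean-variance reward in the form used by Kolm and Ritter (2019), R_t = δw_t − (κ/2)(δw_t)². The generalized version referred to above may contain additional terms. The wealth-increment convention (a short European call on a non-dividend-paying stock, hedged with shares, proportional transaction costs) and all function names are assumptions made for this example only.

```python
import math

def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def bs_delta(S: float, K: float, tau: float, r: float, sigma: float, is_call: bool = True) -> float:
    """Black-Scholes Delta of a European option on a non-dividend-paying stock."""
    if tau <= 0.0:
        # At expiry the Delta reduces to the exercise indicator.
        if is_call:
            return 1.0 if S > K else 0.0
        return -1.0 if S < K else 0.0
    d1 = (math.log(S / K) + (r + 0.5 * sigma**2) * tau) / (sigma * math.sqrt(tau))
    return norm_cdf(d1) if is_call else norm_cdf(d1) - 1.0

def make_state(S, tau, holding, K, r, sigma, include_delta=True):
    """Hypothetical state vector: (price, time-to-maturity, holding[, BS Delta])."""
    state = [S, tau, holding]
    if include_delta:
        # Redundant in principle: once K, r and sigma are fixed, Delta is a
        # deterministic function of the other state entries.
        state.append(bs_delta(S, K, tau, r, sigma))
    return state

def wealth_increment(h_prev, h_new, S_now, S_next, V_now, V_next, cost_rate):
    """Assumed one-step P&L of a short option hedged with h_new shares.

    dw = h_new * (S_next - S_now) - (V_next - V_now) - cost_rate * S_now * |h_new - h_prev|
    """
    trading_cost = cost_rate * S_now * abs(h_new - h_prev)
    return h_new * (S_next - S_now) - (V_next - V_now) - trading_cost

def mean_variance_reward(dw, kappa):
    """Kolm and Ritter (2019) form of the one-step reward: R_t = dw_t - (kappa / 2) * dw_t**2."""
    return dw - 0.5 * kappa * dw**2

# Illustrative numbers only (not taken from any study).
delta = bs_delta(S=100.0, K=100.0, tau=0.25, r=0.0, sigma=0.2)
state = make_state(S=100.0, tau=0.25, holding=0.5, K=100.0, r=0.0, sigma=0.2)
dw = wealth_increment(h_prev=0.5, h_new=0.52, S_now=100.0, S_next=101.0,
                      V_now=4.0, V_next=4.6, cost_rate=0.001)
reward = mean_variance_reward(dw, kappa=0.1)
```

The quadratic term penalizes large swings in wealth in either direction, so a larger risk-aversion parameter κ pushes the agent toward tighter hedges, while the cost term in δw discourages excessive rebalancing.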

Given a defined state-space, action-space, and reward, a DRL agent needs experience, in the form of data, to learn optimal policies and value functions. In the dynamic hedging environment, the data may be simulated or empirical (i.e., market data). In the simulated case, the underlying asset follows a stochastic process such as GBM, or a stochastic volatility model such as SABR (Stochastic Alpha, Beta, Rho; Hagan et al. 2002) or the Heston (1993) model. Monte Carlo simulations of the GBM process are employed for data generation in most studies in the RL hedging literature (Pickard and Lawryshyn 2023). To train DRL agents in an environment with stochastic volatility, Cao et al. (2021) use a SABR model, while the Heston model is used to generate experimental data in Mikkilä and Kanniainen (2023), Murray et al. (2022), Xiao, Yao, and Zhou (2021), and Giurca and Borovkova (2021). Notably, no papers in the literature calibrate the stochastic volatility model parameters to market data.
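The simulated-data route can be sketched as follows: GBM paths via the exact log-normal update, and Heston paths via a full-truncation Euler scheme, which is one of several possible discretizations and is chosen here only for brevity. SABR is omitted. All parameter values are illustrative and uncalibrated, and the function names are hypothetical.

```python
import numpy as np

def simulate_gbm(S0, mu, sigma, T, n_steps, n_paths, rng):
    """Exact GBM paths: S_{t+dt} = S_t * exp((mu - sigma^2/2) dt + sigma sqrt(dt) Z)."""
    dt = T / n_steps
    z = rng.standard_normal((n_paths, n_steps))
    log_inc = (mu - 0.5 * sigma**2) * dt + sigma * np.sqrt(dt) * z
    # Prepend a zero column so column 0 holds S0.
    log_paths = np.concatenate([np.zeros((n_paths, 1)), np.cumsum(log_inc, axis=1)], axis=1)
    return S0 * np.exp(log_paths)

def simulate_heston(S0, v0, mu, kappa, theta, xi, rho, T, n_steps, n_paths, rng):
    """Heston price and variance paths via a full-truncation Euler scheme."""
    dt = T / n_steps
    S = np.empty((n_paths, n_steps + 1))
    v = np.empty_like(S)
    S[:, 0], v[:, 0] = S0, v0
    for t in range(n_steps):
        z1 = rng.standard_normal(n_paths)
        # Correlated shock for the variance process: corr(z1, z2) = rho.
        z2 = rho * z1 + np.sqrt(1.0 - rho**2) * rng.standard_normal(n_paths)
        # Full truncation: use max(v, 0) in both drift and diffusion terms.
        v_pos = np.maximum(v[:, t], 0.0)
        S[:, t + 1] = S[:, t] * np.exp((mu - 0.5 * v_pos) * dt + np.sqrt(v_pos * dt) * z1)
        v[:, t + 1] = v[:, t] + kappa * (theta - v_pos) * dt + xi * np.sqrt(v_pos * dt) * z2
    return S, v

rng = np.random.default_rng(42)
gbm = simulate_gbm(S0=100.0, mu=0.05, sigma=0.2, T=1.0, n_steps=252, n_paths=10_000, rng=rng)
S, v = simulate_heston(S0=100.0, v0=0.04, mu=0.05, kappa=2.0, theta=0.04, xi=0.3,
                       rho=-0.7, T=1.0, n_steps=252, n_paths=10_000, rng=rng)
```

Each row is one episode of daily prices over a one-year horizon; a hedging environment would step through a row, price the option at each date, and feed the resulting state and reward to the agent.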

Consistent results emerge across the DRL hedging literature when training and testing on simulated data: DRL agents trained with a transaction cost penalty outperform the traditional BS Delta hedging strategy in frictional markets (Pickard and Lawryshyn 2023). Giurca and Borovkova (2021), Xiao, Yao, and Zhou (2021), Zheng, He, and Yang (2023), and Mikkilä and Kanniainen (2023) train their agents on simulated data, but each of these studies also performs a sim-to-real test, in which the simulation-trained DRL agent is tested on empirical data. Xiao, Yao, and Zhou (2021), Mikkilä and Kanniainen (2023), and Zheng, He, and Yang (2023) achieve desirable results in the sim-to-real test, while Giurca and Borovkova (2021) do not. In addition to using empirical data for a sim-to-real test, Mikkilä and Kanniainen (2023) train their DRL agent on the empirical dataset. Others who train a DRL agent with empirical data are Pham, Luu, and Tran (2021) and Xu and Dai (2022). Pham, Luu, and Tran (2021) show that their DRL agent earns a profit higher than the market return when tested on empirical prices. Of further note, Xu and Dai (2022) train and test their DRL agent with empirical American option data, which is not seen elsewhere in the DRL hedging literature. However, Xu and Dai (2022) do not modify their DRL approach in any way to account for American versus European options. We have not encountered any studies that train DRL agents specifically to hedge American options by incorporating exercise boundaries into the training and testing process. This absence of literature on DRL for hedging American options is corroborated by two literature reviews that analyze the use of DRL for dynamic option hedging (Pickard and Lawryshyn 2023; Liu 2023).
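For readers unfamiliar with the term, an American put's early-exercise boundary is the critical stock price, at each date, below which immediate exercise beats continuation. The sketch below extracts it from a standard Cox-Ross-Rubinstein (CRR) binomial tree under constant volatility; it is a textbook illustration of the concept, assumed here for exposition, and not the method of this paper or of any study cited above.

```python
import math

def crr_american_put(S0, K, r, sigma, T, n_steps):
    """Price an American put on a CRR tree and record its early-exercise boundary.

    Returns (price, boundary), where boundary[i] is the highest node price at step i
    at which immediate exercise is strictly better than continuation (None if no
    node exercises at that step). The expiry step is not included.
    """
    dt = T / n_steps
    u = math.exp(sigma * math.sqrt(dt))
    d = 1.0 / u
    disc = math.exp(-r * dt)
    p = (math.exp(r * dt) - d) / (u - d)  # risk-neutral probability of an up move
    # Terminal payoffs; node j at step i has price S0 * u**j * d**(i - j).
    values = [max(K - S0 * u**j * d**(n_steps - j), 0.0) for j in range(n_steps + 1)]
    boundary = [None] * n_steps
    for i in range(n_steps - 1, -1, -1):
        new_values = []
        for j in range(i + 1):
            S = S0 * u**j * d**(i - j)
            continuation = disc * (p * values[j + 1] + (1 - p) * values[j])
            exercise = K - S
            if exercise > continuation:
                new_values.append(exercise)
                # Track the highest price at which exercise is optimal at this step.
                if boundary[i] is None or S > boundary[i]:
                    boundary[i] = S
            else:
                new_values.append(continuation)
        values = new_values
    return values[0], boundary

price, boundary = crr_american_put(S0=100.0, K=100.0, r=0.05, sigma=0.2, T=1.0, n_steps=500)
```

A hedging agent for American options must account for this boundary, since the option ceases to exist once the holder exercises; a European-style hedging setup ignores that possibility.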

Authors:

(1) Reilly Pickard, Department of Mechanical and Industrial Engineering, University of Toronto, Toronto, ON M5S 3G8, Canada;

(2) Finn Wredenhagen, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(3) Julio DeJesus, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(4) Mario Schlener, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(5) Yuri Lawryshyn, Department of Chemical Engineering and Applied Chemistry, University of Toronto, Toronto, ON M5S 3E5, Canada.
